The Push for Zero-Loss Migration Standards

Good Enough Isn't Good Enough

What’s in it?

High failure rates in enterprise data migration pose severe operational and compliance risks.

An automated, component-based approach replaces error-prone manual methods.

Using tools like PowerShell and metadata-driven systems ensures zero data loss and faster processing.

Automation extends beyond transfer to include validation, reporting, and administrative tasks.

The methodology integrates robust security controls and compliance frameworks for regulated industries.

This proven approach is becoming the new standard for efficient and reliable large-scale data transfers.

Imagine your enterprise systems managing billions of critical records that sustain daily business operations. Now, consider the immense risk when you need to migrate this vital data.

Failure rates remain alarmingly high, and a single corrupted file or lost database entry can halt production lines, postpone major product launches, or irrevocably erase years of research.

In heavily regulated sectors like finance or healthcare, a data mishap can escalate into multi-million-dollar compliance penalties.

The statistics confronting you are stark: research indicates that a staggering 83% of data migrations either fail outright or blow past their allocated budgets and timelines.

Fewer than 70% are deemed successful, with 64% exceeding their budget and a mere 46% delivered on time. The pattern points to a common cause: most organisations still depend on error-prone manual processes, which sets the stage for systemic failures.

However, a new approach is emerging, pioneered by technical leaders who have decided that zero data loss during large-scale enterprise migrations is not only possible but essential.

They are blending deep database management expertise with cutting-edge automation tools, creating repeatable methodologies that are becoming industry best practices.

You can learn from the experience of professionals like Manikanteswara Yasaswi Kurra, who encountered the brutal cost of migration failures early in his career at a healthcare technology firm.

When the parent company decided to spin off its R&D division, he was tasked with immediately moving years of irreplaceable research data. This included mission-critical assets like Ironwood sample records and NovaSTB databases containing thousands of experimental results.

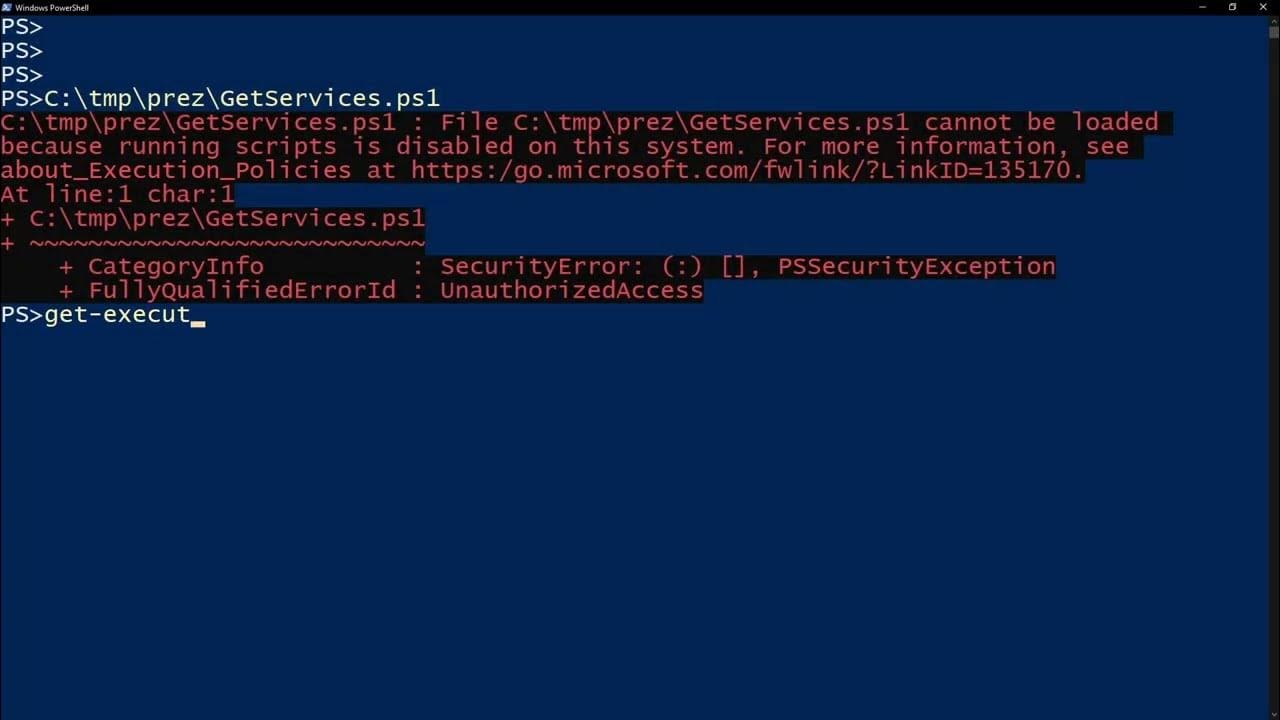

The conventional migration path stretched over months and carried a high probability of data corruption, a risk you cannot afford. Instead, he engineered a novel solution using scheduled server jobs and PowerShell scripts to fully automate the process.

The scripts created metadata-driven columns within SharePoint. The result wasn't just a safe data transfer; it transformed the data into a more discoverable and valuable asset, making information retrieval significantly faster.
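To make the idea of metadata-driven columns concrete, here is a minimal Python sketch. It is not the SharePoint or PowerShell implementation from this project; the `Document` type, the column names (`project`, `doc_type`, `year`), and the sample paths are all hypothetical. It only illustrates why tagging each migrated item with structured columns turns retrieval into a direct lookup rather than a folder-by-folder search.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    path: str       # where the migrated file lives
    project: str    # hypothetical metadata column
    doc_type: str   # hypothetical metadata column
    year: int       # hypothetical metadata column


def build_index(docs):
    """Map each (column, value) pair to the set of document paths carrying it."""
    index = defaultdict(set)
    for doc in docs:
        for column in ("project", "doc_type", "year"):
            index[(column, getattr(doc, column))].add(doc.path)
    return index


def find(index, **filters):
    """Return the paths that match every column=value filter."""
    matches = [index.get(item, set()) for item in filters.items()]
    return sorted(set.intersection(*matches)) if matches else []


docs = [
    Document("/research/a.csv", "P-100", "assay", 2019),
    Document("/research/b.csv", "P-100", "report", 2020),
    Document("/research/c.csv", "P-200", "assay", 2020),
]
index = build_index(docs)
print(find(index, project="P-100", doc_type="assay"))
```

Each additional column narrows the result through a set intersection, which is roughly what a filtered view over metadata columns gives you in a document library.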

The outcome surpassed all expectations, achieving zero data loss while slashing request approval times from days to mere hours, a processing improvement of over 60%.

Perhaps most tellingly, the metadata-driven governance model proved so effective that other teams across the organisation adopted it for their own data management challenges.

"When you're handling years of research data, there's no room for error," he notes, a principle you must adopt.

"Legacy migration methods often accept a certain level of data loss as inevitable, but in regulated environments, that's simply unacceptable. We had to architect systems that guaranteed complete data integrity while simultaneously enhancing operational efficiency."

Breaking the Automation Barrier

The triumph of this initial migration catalysed more ambitious automation initiatives. He developed Java-based APIs and Spring Boot synchronisation jobs to seamlessly connect SAP project codes and employee data with laboratory information management systems.

This integration eradicated duplicate manual data entry, boosting accuracy by 40% and finally enabling collaboration between departments that had historically worked in data silos.
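The core of such a synchronisation job can be sketched in a few lines. The version below is written in Python for brevity rather than Java and Spring Boot, and it is an illustration, not the code used in the project: the function names, the `PRJ-` keys, and the record shape are assumptions. It treats one system as the source of truth, works out which records the other system is missing or holds in a stale form, and applies only those changes, so nobody re-keys the same data by hand.

```python
def plan_sync(source, target):
    """Compare two {key: record} mappings and work out what the target needs."""
    to_insert = {k: v for k, v in source.items() if k not in target}
    to_update = {k: v for k, v in source.items() if k in target and target[k] != v}
    # Records the target holds that the source no longer knows about.
    orphaned = sorted(k for k in target if k not in source)
    return to_insert, to_update, orphaned


def apply_sync(source, target):
    """Bring the target in line with the source and report what changed."""
    to_insert, to_update, orphaned = plan_sync(source, target)
    target.update(to_insert)
    target.update(to_update)
    # Orphans are reported, not deleted, so a person can review them.
    return {"inserted": len(to_insert), "updated": len(to_update), "orphaned": orphaned}


source = {"PRJ-1": {"name": "Alpha"}, "PRJ-2": {"name": "Beta"}}
target = {"PRJ-2": {"name": "Bta"}, "PRJ-9": {"name": "Old"}}
print(apply_sync(source, target))
```

Running the job a second time changes nothing, which is the property that makes it safe to schedule.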

The technical methodology you should consider employs a multi-layered framework of verification and automation. Rather than transferring data in monolithic, difficult-to-verify batches, the approach decomposes the migration into smaller, verifiable components.

Each component undergoes automated validation before, during, and after its transfer using specialised scripts that check for data completeness and correctness.

PowerShell scripts manage the physical data movement, while the metadata architecture in SharePoint provides essential structure and enhances searchability.

Crucially, automated validation checkpoints are established throughout the pipeline, ensuring any anomaly is caught and remediated before it can propagate and disrupt downstream processes.
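The component-and-checkpoint pattern described here can be sketched as follows. This is a simplified Python illustration, not the PowerShell scripts used in the project: `write_chunk` and `read_chunk` are hypothetical callbacks standing in for whatever moves data to and from your target system, and the chunk size is arbitrary. Each chunk is fingerprinted before transfer, written, then read back and compared, and the run stops at the first mismatch.

```python
import hashlib


def checksum(records):
    """SHA-256 over the records in order; any change or loss alters the digest."""
    digest = hashlib.sha256()
    for record in records:
        digest.update(repr(record).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def chunks(records, size):
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def migrate(records, write_chunk, read_chunk, size=1000):
    """Move records chunk by chunk, verifying each one before moving on."""
    verified = 0
    for number, chunk in enumerate(chunks(records, size)):
        expected = checksum(chunk)        # before: fingerprint the source chunk
        write_chunk(number, chunk)        # transfer the chunk
        written = read_chunk(number)      # after: read it back from the target
        if len(written) != len(chunk) or checksum(written) != expected:
            raise ValueError(f"chunk {number} failed validation; migration halted")
        verified += 1
    return verified


# Usage with an in-memory dictionary standing in for the target system.
target = {}
records = [("sample", i) for i in range(25)]
print(migrate(records, target.__setitem__, target.__getitem__, size=10))
```

Because a failure raises immediately, a corrupted chunk never reaches downstream steps, and you only need to retry the chunk that failed instead of the whole batch.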

This method demands greater upfront planning from you, but it eliminates the costly delays and complex data recovery efforts that plague traditional migration projects.

Industry research corroborates that strategic automation can reduce costs by 40% to 75% while improving organisational efficiency. His work delivers these benefits in practice, extending automation beyond mere data transfer to encompass routine administrative functions.

He built systems to automate the creation of JIRA templates and migration tasks, saving hundreds of manual work hours annually and reducing reporting time by over 40%.

These efficiencies allow your technical staff to focus on higher-value innovation while ensuring routine processes execute consistently and reliably every time.

Furthermore, he implemented global Power BI dashboards that provide cross-laboratory visibility into service requests, resource allocation, and workload distribution.

For multinational organisations, this was a breakthrough, giving managers their first real-time, holistic view of operational data across all global facilities.

These dashboards incorporate AI-driven analysis of customer feedback, generating automated insights that directly inform leadership decisions on future technology investments.

"The goal was never just to move data from point A to point B," he explains.

"We aimed to create systems that amplified the data's inherent value and accessibility while eliminating the manual toil that breeds errors and causes delays." It is a philosophy you should embed in your projects.

Ensuring Security and Compliance at Scale

This methodology also directly addresses the security complexities that often paralyse enterprise migrations.

By collaborating closely with Security and Infrastructure teams, he ensured all migration processes incorporated robust encryption, strict access controls, and comprehensive audit logging aligned with Zero Trust security principles.

This security-by-design mindset makes the approach viable for highly regulated industries where data breaches have severe repercussions.

He also partnered with validation leads to develop a risk-based validation framework, now adopted as a best practice in regulated environments.

This framework provides you with a structured methodology for testing and verification, guaranteeing compliance requirements are met at every stage of the migration lifecycle.
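The article does not spell out the framework's internals, so the following Python sketch shows only one common way a risk-based approach can work, under stated assumptions: each dataset is assigned a risk tier, and the tier decides how much of it is re-verified after transfer. The tier names, the sampling rates in `SAMPLE_RATE`, and the function name are hypothetical.

```python
import random

# Hypothetical tiers: fraction of records to re-verify after transfer.
SAMPLE_RATE = {"high": 1.0, "medium": 0.25, "low": 0.05}


def select_for_verification(record_ids, risk, seed=0):
    """Pick which records to re-check, scaled to the dataset's risk tier."""
    if not record_ids:
        return []
    rate = SAMPLE_RATE[risk]
    # Always check at least one record, however low the tier.
    count = max(1, round(len(record_ids) * rate))
    rng = random.Random(seed)  # fixed seed so the sample can be reproduced in an audit
    return sorted(rng.sample(record_ids, count))


ids = list(range(100))
print(len(select_for_verification(ids, "high")))
print(len(select_for_verification(ids, "medium")))
print(len(select_for_verification(ids, "low")))
```

High-risk data is checked in full, while lower tiers are sampled, which concentrates verification effort where a defect would cost the most. The fixed seed keeps the selection repeatable, so the same sample can be shown to an auditor later.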

The techniques forged in these high-stakes migrations are now influencing how other organisations tackle similar challenges.

The risk-based validation framework and the metadata-driven SharePoint governance model are being utilised by teams managing diverse enterprise data types, demonstrating their versatility far beyond the original use case.

This widespread adoption signals an industry-wide shift toward intelligent automation and deep integration.

Forward-thinking companies recognise that manual data management processes cannot scale to meet exploding data volumes and escalating regulatory demands.

The success of these zero-loss migrations demonstrates that complete data integrity and superior operational efficiency can be achieved together through meticulous system design and strategic automation.

Recognition for this transformative work extends beyond organisational boundaries. He received the Best Employee Award for Customer Delight Champion, a testament to how innovative technical solutions dramatically improve experiences for both internal users and external customers.

The automation and integration frameworks now serve as benchmarks for compliance-driven industries, with subsequent software releases adopting these approaches for their proven scalability and impact.

An Industry Transformation on the Horizon

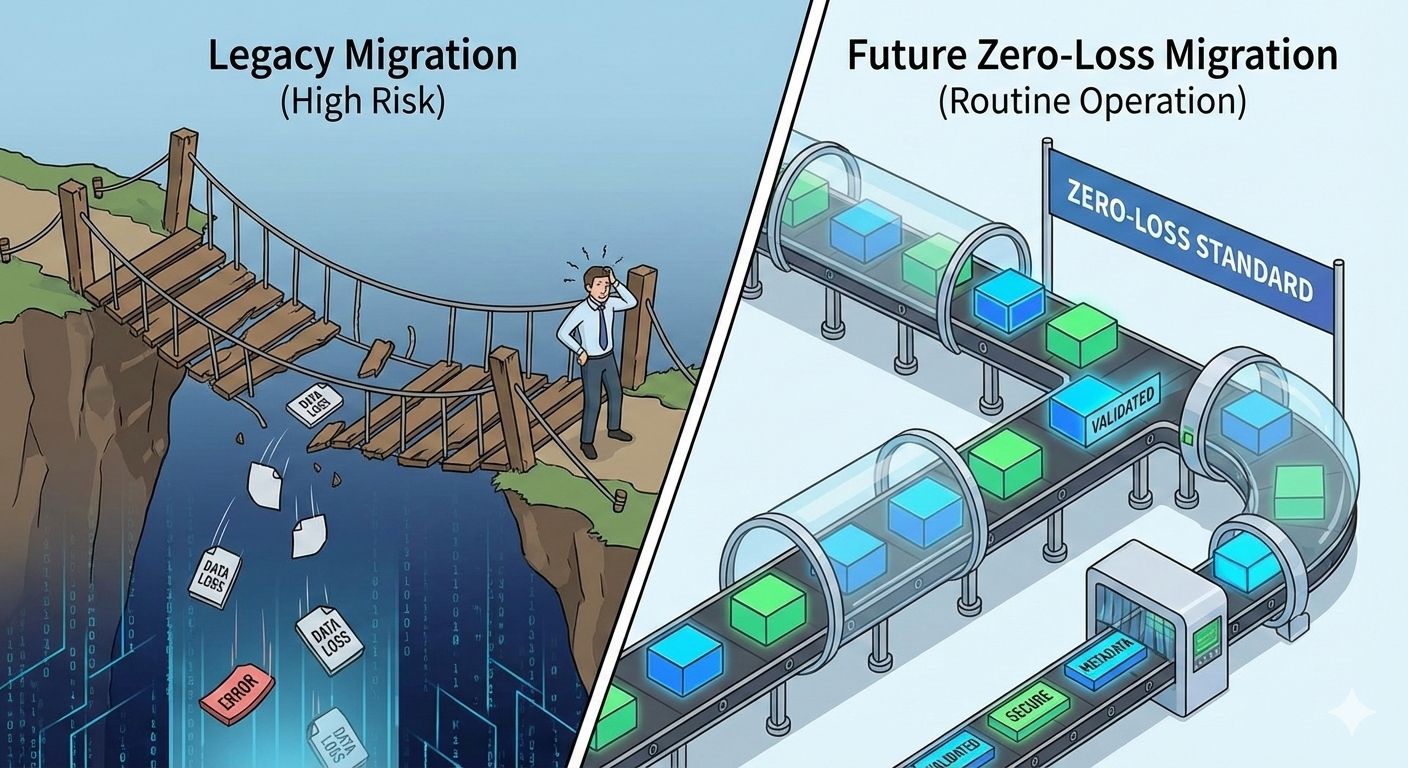

Looking ahead, the synthesis of automated validation, metadata-driven organisation, and integrated security controls points toward a future where you can execute large-scale data migrations as routine operations rather than high-risk endeavours.

As your enterprise modernises legacy systems and migrates to cloud platforms, the methodologies proven in these projects provide a reliable template for preserving absolute data integrity while boosting operational efficiency.

This paradigm shift, from tacitly accepting data loss as normal to demanding zero-loss migrations, represents more than a technical upgrade. It reflects a mature understanding that enterprise data is far too valuable to gamble with during system transitions.

It proves that thoughtful automation can eliminate human error while making systems intrinsically more efficient and user-friendly.

Organisations that implement these approaches report substantial gains in both operational performance and regulatory confidence.

The metadata-driven governance models and automated validation frameworks pioneered through this work are being cited in industry publications and adopted across various sectors, strongly suggesting that zero-loss migration is poised to become the new standard by which you will be measured.

Thank you for reading

DataMigration.AI & Team